Configuration

Vertesia is a bring-your-own-key service that supports many model inference providers, such as OpenAI, Google Vertex AI, and AWS Bedrock. Users can configure and use several providers of their choice through Studio, the REST API, or the SDK.

Refer to each provider's documentation page to learn how to use it with Vertesia.

For AWS Bedrock and Google Vertex AI, helper scripts that automate the configuration are available here.

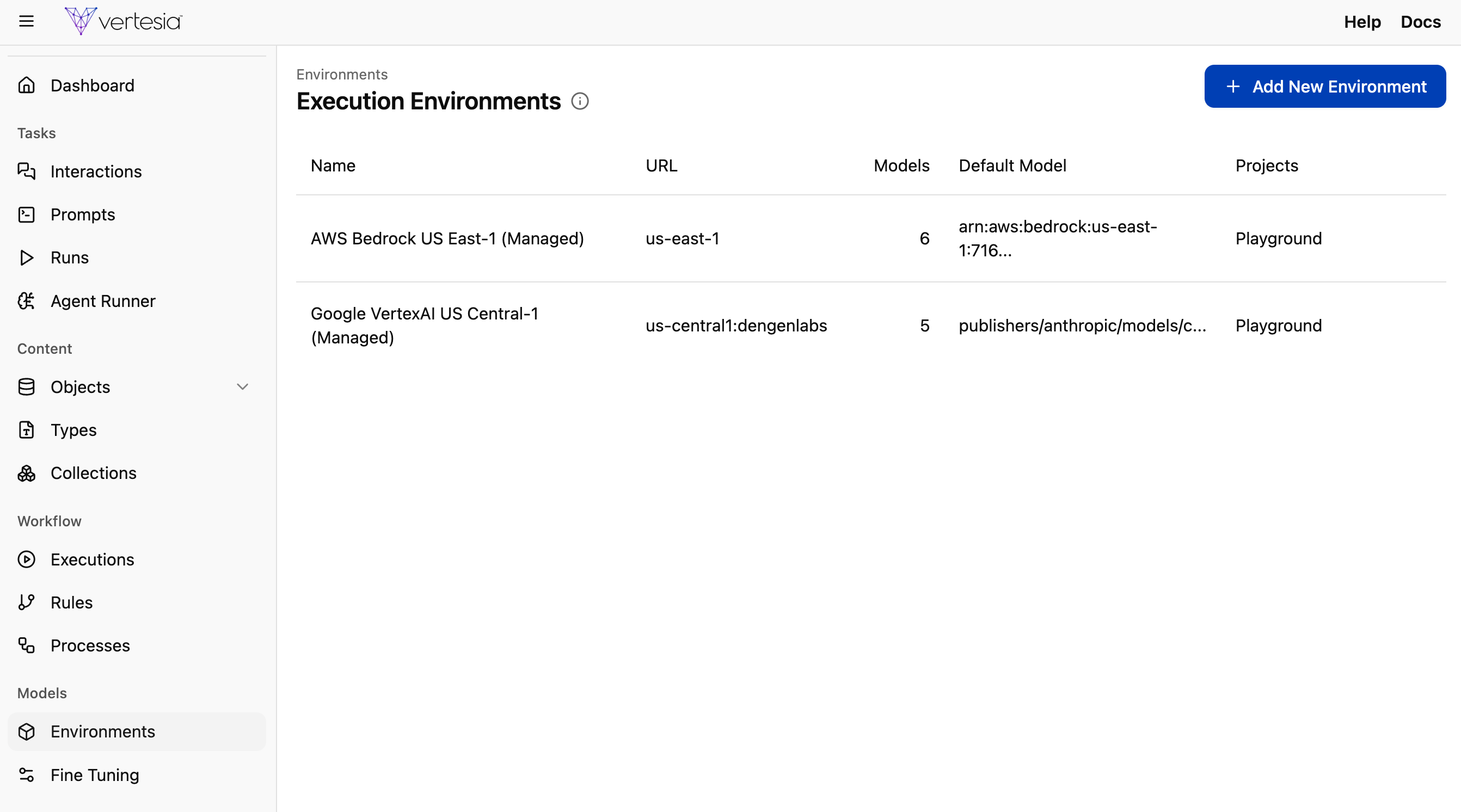

Vertesia Execution Environments

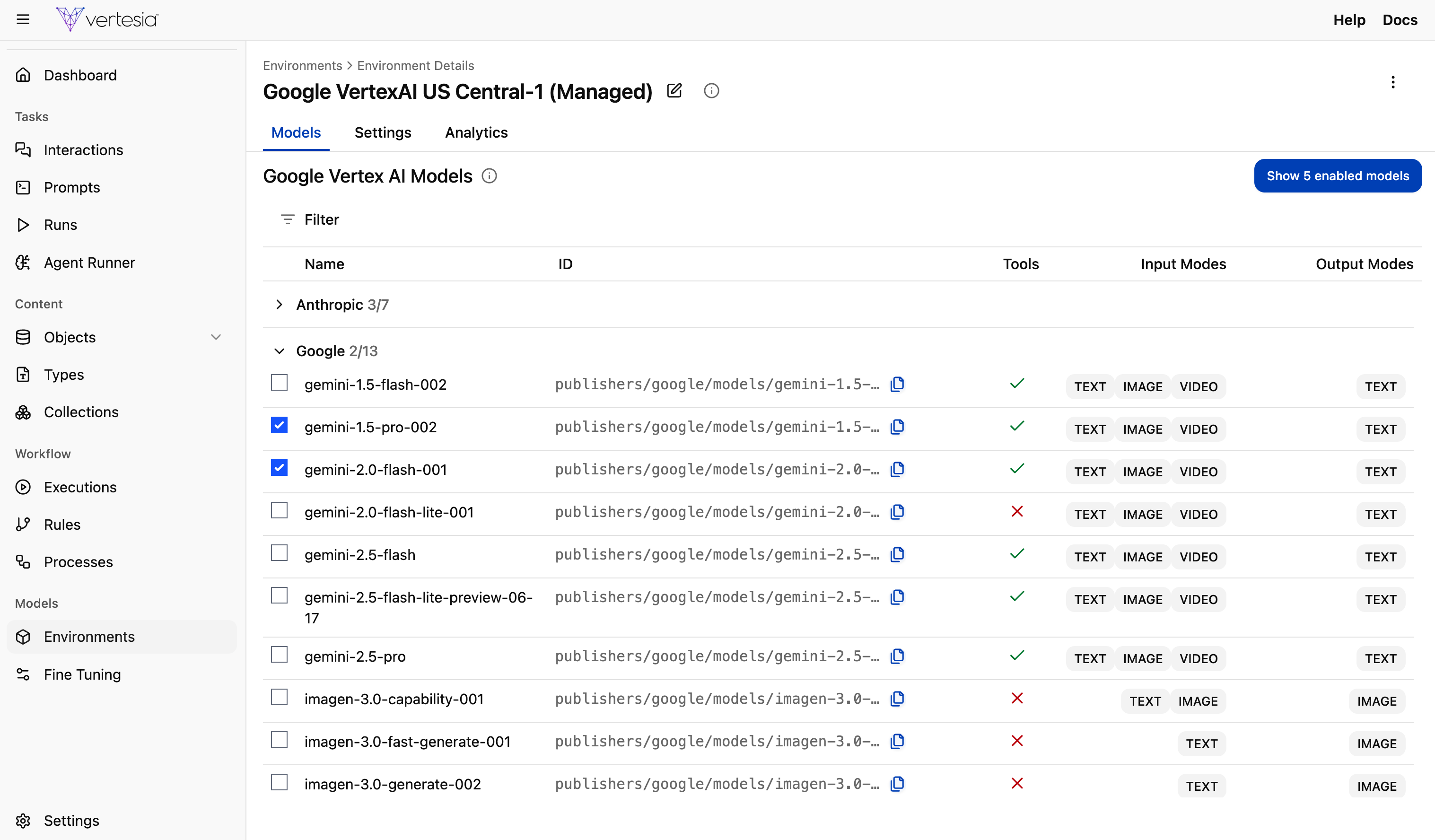

Vertesia introduces the concept of an Execution Environment. An environment is configured to use one inference provider and contains the individual models available from that provider. Vertesia also provides managed environments, including AWS Bedrock and Google Vertex AI, that you can use to get started quickly and try out the platform. To use them, accept the default setting when you create a new project.

Execution Environment

An Execution Environment's attributes include:

- An inference service provider

- An API key

- An Endpoint URL

- Available models from the provider that can be enabled

- Enabled models

- Projects within the user's Vertesia organization that can use the environment
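The attributes above can be pictured as a small data model. The Python sketch below is a hypothetical illustration, not the Vertesia SDK: the class name `ExecutionEnvironment`, its fields, and the `enable`/`can_use` helpers are assumptions chosen to show how available models, enabled models, and project access relate to each other.

```python
from dataclasses import dataclass, field


@dataclass
class ExecutionEnvironment:
    """Hypothetical model of an Execution Environment (not the Vertesia SDK type)."""
    provider: str          # inference service provider, e.g. "openai"
    api_key: str           # user-supplied credential (bring your own key)
    endpoint_url: str      # endpoint the environment sends requests to
    available_models: set[str] = field(default_factory=set)  # models the provider offers
    enabled_models: set[str] = field(default_factory=set)    # subset enabled in this environment
    allowed_projects: set[str] = field(default_factory=set)  # projects allowed to use it

    def enable(self, model: str) -> None:
        # Only a model that the provider makes available can be enabled.
        if model not in self.available_models:
            raise ValueError(f"{model!r} is not available from {self.provider}")
        self.enabled_models.add(model)

    def can_use(self, project: str, model: str) -> bool:
        # A project can call a model only if it has access to the environment
        # and the model is enabled there.
        return project in self.allowed_projects and model in self.enabled_models


env = ExecutionEnvironment(
    provider="openai",
    api_key="demo-key",  # placeholder, not a real key
    endpoint_url="https://api.openai.com/v1",
    available_models={"model-a", "model-b"},  # hypothetical model names
    allowed_projects={"project-1"},
)
env.enable("model-a")
```

In this sketch, `env.can_use("project-1", "model-a")` is true, while `model-b` stays unusable until it is enabled, and `project-2` has no access at all.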

Code Execution Environment (Daytona Sandbox)

For code execution and advanced agent workflows, Vertesia uses managed sandboxes provided by Daytona. These sandboxes are orchestrated by the execute_shell tool and are created on demand for each workflow run.

To enable Daytona-based code execution:

- Configure a DAYTONA_API_KEY secret in your environment using your secret provider.

- Optionally, set DAYTONA_API_URL to point to a custom Daytona deployment (defaults to https://app.daytona.io/api).
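The two settings above combine into a simple rule: the key is required and the URL falls back to a default. The sketch below assumes the secret provider surfaces both values to the process as environment variables; the function name `resolve_daytona_config` is hypothetical and is not part of Vertesia or the Daytona SDK.

```python
import os
from typing import Mapping

# Default documented for DAYTONA_API_URL.
DEFAULT_DAYTONA_API_URL = "https://app.daytona.io/api"


def resolve_daytona_config(env: Mapping[str, str] = os.environ) -> tuple[str, str]:
    """Hypothetical helper returning (api_key, api_url) for Daytona."""
    api_key = env.get("DAYTONA_API_KEY")
    if not api_key:
        # Without the required secret, Daytona-based code execution cannot start.
        raise RuntimeError("DAYTONA_API_KEY is not set; Daytona code execution is unavailable")
    # DAYTONA_API_URL is optional; an unset or empty value uses the hosted API.
    api_url = env.get("DAYTONA_API_URL") or DEFAULT_DAYTONA_API_URL
    return api_key, api_url
```

Passing an explicit mapping, for example `resolve_daytona_config({"DAYTONA_API_KEY": "k"})`, makes the fallback easy to check without touching the real process environment.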

When an agent first calls execute_shell, Vertesia:

- Creates or reuses a sandbox associated with the workflow run.

- Syncs scripts, data files, skills, and documents into the sandbox.
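The create-or-reuse step amounts to keying sandboxes by workflow run ID. The sketch below is a hypothetical, in-memory illustration: `Sandbox`, `SandboxRegistry`, and `on_execute_shell` are invented names, and the incremental sync (copying only new or changed files) is an assumption, since the sync strategy is not described here.

```python
from dataclasses import dataclass, field


@dataclass
class Sandbox:
    """Hypothetical stand-in for a Daytona sandbox; files are held in memory."""
    run_id: str
    files: dict[str, bytes] = field(default_factory=dict)

    def sync(self, workspace: dict[str, bytes]) -> list[str]:
        # Assumed incremental sync: copy only files that are new or changed.
        changed = [path for path, data in workspace.items() if self.files.get(path) != data]
        for path in changed:
            self.files[path] = workspace[path]
        return changed


class SandboxRegistry:
    """Keeps one sandbox per workflow run so repeated calls share it."""

    def __init__(self) -> None:
        self._by_run: dict[str, Sandbox] = {}
        self.created = 0  # number of sandboxes actually created

    def get_or_create(self, run_id: str) -> Sandbox:
        sandbox = self._by_run.get(run_id)
        if sandbox is None:
            sandbox = Sandbox(run_id)
            self._by_run[run_id] = sandbox
            self.created += 1
        return sandbox


def on_execute_shell(registry: SandboxRegistry, run_id: str, workspace: dict[str, bytes]) -> Sandbox:
    # The first call for a run creates the sandbox; later calls reuse it.
    sandbox = registry.get_or_create(run_id)
    # Bring scripts, data files, skills, and documents into the sandbox.
    sandbox.sync(workspace)
    return sandbox
```

Keying by run ID keeps state such as installed packages or intermediate files available across successive execute_shell calls in the same run, while separate runs stay isolated.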

On workflow completion or failure, Vertesia automatically stops Daytona sandboxes for that run to release resources.
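Stopping the sandbox on both completion and failure is the classic try/finally cleanup pattern. The sketch below shows that pattern with a Python context manager; `FakeSandbox`, `sandbox_for_run`, and `run_workflow` are hypothetical names, and the code does not call the Daytona API.

```python
from contextlib import contextmanager
from typing import Iterator


class FakeSandbox:
    """Hypothetical sandbox handle; stop() releases its resources."""

    def __init__(self, run_id: str) -> None:
        self.run_id = run_id
        self.running = True

    def stop(self) -> None:
        self.running = False


@contextmanager
def sandbox_for_run(run_id: str, registry: dict[str, FakeSandbox]) -> Iterator[FakeSandbox]:
    sandbox = FakeSandbox(run_id)
    registry[run_id] = sandbox
    try:
        yield sandbox
    finally:
        # Runs whether the workflow body returns normally or raises.
        sandbox.stop()
        registry.pop(run_id, None)


def run_workflow(run_id: str, registry: dict[str, FakeSandbox], fail: bool = False) -> str:
    with sandbox_for_run(run_id, registry) as sandbox:
        if fail:
            raise RuntimeError("workflow step failed")
        return f"completed in sandbox for {sandbox.run_id}"
```

Because cleanup lives in `finally`, a failing step still stops the sandbox before the error propagates to the caller.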